This is an in-depth walkthrough that follows the official tutorials, of which there are six. This post covers prediction_tutorial.ipynb, the sixth and last one. Link: here

The six tutorials are:

- nuscenes_tutorial.ipynb

- nuscenes_lidarseg_panoptic_tutorial.ipynb

- nuimages_tutorial.ipynb

- can_bus_tutorial.ipynb

- map_expansion_tutorial.ipynb

- prediction_tutorial.ipynb

Background

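This tutorial is about making predictions with the data, again using the nuScenes devkit. It reads more like a guide to using the data for prediction, training models, and submitting results. Overall it is worth reading, so let's go through it step by step.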

Preparation

Our dataset is stored in /Users/lau/data_sets/nuscenes

Start Jupyter and create prediction.ipynb.

pip install nuscenes-devkit

from nuscenes import NuScenes

# This is the path where you stored your copy of the nuScenes dataset.

DATAROOT = '/Users/lau/data_sets/nuscenes'

nuscenes = NuScenes('v1.0-mini', dataroot=DATAROOT)

# Output

======

Loading NuScenes tables for version v1.0-mini...

Loading nuScenes-lidarseg...

Loading nuScenes-panoptic...

32 category,

8 attribute,

4 visibility,

911 instance,

12 sensor,

120 calibrated_sensor,

31206 ego_pose,

8 log,

10 scene,

404 sample,

31206 sample_data,

18538 sample_annotation,

4 map,

404 lidarseg,

404 panoptic,

Done loading in 0.649 seconds.

======

Reverse indexing ...

Done reverse indexing in 0.1 seconds.

======

Prediction Challenge

Data Splits

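The goal of the nuScenes prediction challenge is to predict the future locations of agents in the nuScenes dataset. Agents are indexed by an instance token and a sample token. To get the list of agents in the challenge's train and val sets, we provide a function called get_prediction_challenge_split.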

get_prediction_challenge_split returns a list of strings of the form {instance_token}_{sample_token}.
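Each entry is a single string, so it has to be split before the two tokens can be passed to PredictHelper. Below is a minimal sketch. It assumes the tokens themselves never contain an underscore, which holds for the 32-character hex tokens in the mini_train output. The helper name parse_split_entries is hypothetical.

```python
from typing import List, Tuple


def parse_split_entries(entries: List[str]) -> List[Tuple[str, str]]:
    """Turn '{instance_token}_{sample_token}' strings into (instance, sample) pairs."""
    pairs = []
    for entry in entries:
        # Unpacking raises ValueError if an entry does not contain exactly one "_".
        instance_token, sample_token = entry.split("_")
        pairs.append((instance_token, sample_token))
    return pairs


# Two entries copied from the mini_train output in this article.
example = [
    "bc38961ca0ac4b14ab90e547ba79fbb6_39586f9d59004284a7114a68825e8eec",
    "bc38961ca0ac4b14ab90e547ba79fbb6_356d81f38dd9473ba590f39e266f54e5",
]
pairs = parse_split_entries(example)

# Both entries belong to the same agent (instance) at different samples (time steps).
print(len({instance for instance, _ in pairs}))  # 1
```

The same instance token appearing with several sample tokens is expected: one agent contributes one entry per keyframe at which it is evaluated.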

from nuscenes.eval.prediction.splits import get_prediction_challenge_split

mini_train = get_prediction_challenge_split("mini_train", dataroot=DATAROOT)

mini_train[:5]

# Output

['bc38961ca0ac4b14ab90e547ba79fbb6_39586f9d59004284a7114a68825e8eec',

'bc38961ca0ac4b14ab90e547ba79fbb6_356d81f38dd9473ba590f39e266f54e5',

'bc38961ca0ac4b14ab90e547ba79fbb6_e0845f5322254dafadbbed75aaa07969',

'bc38961ca0ac4b14ab90e547ba79fbb6_c923fe08b2ff4e27975d2bf30934383b',

'bc38961ca0ac4b14ab90e547ba79fbb6_f1e3d9d08f044c439ce86a2d6fcca57b']

Future/Past Data

We provide a class called PredictHelper with methods for querying an agent's past and future data. It is instantiated by wrapping an instance of the NuScenes class.

from nuscenes.prediction import PredictHelper

helper = PredictHelper(nuscenes)

To get an agent's data at a particular point in time, use the get_sample_annotation method.

instance_token, sample_token = mini_train[0].split("_")

annotation = helper.get_sample_annotation(instance_token, sample_token)

annotation

# Output

{'token': 'a286c9633fa34da5b978758f348996b0',

'sample_token': '39586f9d59004284a7114a68825e8eec',

'instance_token': 'bc38961ca0ac4b14ab90e547ba79fbb6',

'visibility_token': '4',

'attribute_tokens': ['cb5118da1ab342aa947717dc53544259'],

'translation': [392.945, 1148.426, 0.766],

'size': [1.708, 4.01, 1.631],

'rotation': [-0.5443682117180475, 0.0, 0.0, 0.8388463804957943],

'prev': '16140fbf143d4e26a4a7613cbd3aa0e8',

'next': 'b41e15b89fd44709b439de95dd723617',

'num_lidar_pts': 0,

'num_radar_pts': 0,

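'category_name': 'vehicle.car'}

To get an agent's future or past data, use the get_future_for_agent / get_past_for_agent methods. If in_agent_frame is set to True, the coordinates are expressed in the agent's local frame; otherwise they are expressed in the global frame.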

future_xy_local = helper.get_future_for_agent(instance_token, sample_token, seconds=3, in_agent_frame=True)

future_xy_local

# Output

array([[ 0.01075063, 0.2434942 ],

[-0.20463666, 1.20515386],

[-0.20398583, 2.57851309],

[-0.25867757, 4.50313379],

[-0.31359088, 6.67620961],

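[-0.31404147, 9.67727022]])

The agent frame is centered on the agent's current position, with the agent's heading aligned with the positive Y axis. For example, the last coordinate in future_xy_local corresponds to a point about 9.68 m ahead of and 0.31 m to the left of the agent's starting position.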
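To make the agent frame concrete, here is a minimal NumPy sketch of the conversion for this agent. It first subtracts the agent's current position, then rotates by π/2 − yaw so that the heading points along +Y.

Assumptions:

- The rotation is stored as a (w, x, y, z) quaternion, as in the annotation printed above, and only its yaw is used.
- The function names are hypothetical. The devkit performs this conversion internally; this is not its code.

Feeding in the agent's current annotation and two of its global future points reproduces the local values in future_xy_local.

```python
import numpy as np


def quaternion_to_yaw(q):
    """Yaw (rotation about z) of a (w, x, y, z) quaternion, in radians."""
    w, x, y, z = q
    return np.arctan2(2.0 * (w * z + x * y), 1.0 - 2.0 * (y * y + z * z))


def global_to_agent_frame(coords_xy, translation, rotation):
    """Express global (x, y) points in a frame centred on the agent, heading along +Y."""
    yaw = quaternion_to_yaw(rotation)
    theta = np.pi / 2 - yaw  # rotation that maps the heading direction onto +Y
    rot = np.array([[np.cos(theta), -np.sin(theta)],
                    [np.sin(theta), np.cos(theta)]])
    shifted = np.asarray(coords_xy, dtype=float) - np.asarray(translation[:2], dtype=float)
    return shifted @ rot.T  # apply rot to each row vector


# Current annotation of the agent (values from the get_sample_annotation output).
translation = [392.945, 1148.426, 0.766]
rotation = [-0.5443682117180475, 0.0, 0.0, 0.8388463804957943]

# First and last global future positions of the same agent.
future_global = np.array([[392.836, 1148.208], [389.29, 1139.46]])
print(global_to_agent_frame(future_global, translation, rotation))
```

A negative x in this frame means "to the left", because +X points to the agent's right when +Y points forward.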

future_xy_global = helper.get_future_for_agent(instance_token, sample_token, seconds=3, in_agent_frame=False)

future_xy_global

# Output

array([[ 392.836, 1148.208],

[ 392.641, 1147.242],

[ 392.081, 1145.988],

[ 391.347, 1144.208],

[ 390.512, 1142.201],

[ 389.29 , 1139.46 ]])

Note that we can also return the entire annotation record by passing just_xy=False. In that case, however, the in_agent_frame argument is ignored.

helper.get_future_for_agent(instance_token, sample_token, seconds=3, in_agent_frame=True, just_xy=False)

# Output

[{'token': 'b41e15b89fd44709b439de95dd723617',

'sample_token': '356d81f38dd9473ba590f39e266f54e5',

'instance_token': 'bc38961ca0ac4b14ab90e547ba79fbb6',

'visibility_token': '4',

'attribute_tokens': ['cb5118da1ab342aa947717dc53544259'],

'translation': [392.836, 1148.208, 0.791],

'size': [1.708, 4.01, 1.631],

'rotation': [-0.5443682117180475, 0.0, 0.0, 0.8388463804957943],

'prev': 'a286c9633fa34da5b978758f348996b0',

'next': 'b2b43ef63f5242b2a4c0b794e673782d',

'num_lidar_pts': 10,

'num_radar_pts': 2,

'category_name': 'vehicle.car'},

{'token': 'b2b43ef63f5242b2a4c0b794e673782d',

'sample_token': 'e0845f5322254dafadbbed75aaa07969',

'instance_token': 'bc38961ca0ac4b14ab90e547ba79fbb6',

'visibility_token': '4',

'attribute_tokens': ['cb5118da1ab342aa947717dc53544259'],

'translation': [392.641, 1147.242, 0.816],

'size': [1.708, 4.01, 1.631],

'rotation': [-0.5443682117180475, 0.0, 0.0, 0.8388463804957943],

'prev': 'b41e15b89fd44709b439de95dd723617',

'next': '7bcf4bc87bf143588230254034eada12',

'num_lidar_pts': 13,

'num_radar_pts': 3,

'category_name': 'vehicle.car'},

{'token': '7bcf4bc87bf143588230254034eada12',

'sample_token': 'c923fe08b2ff4e27975d2bf30934383b',

'instance_token': 'bc38961ca0ac4b14ab90e547ba79fbb6',

'visibility_token': '4',

'attribute_tokens': ['cb5118da1ab342aa947717dc53544259'],

'translation': [392.081, 1145.988, 0.841],

'size': [1.708, 4.01, 1.631],

'rotation': [-0.5443682117180475, 0.0, 0.0, 0.8388463804957943],

'prev': 'b2b43ef63f5242b2a4c0b794e673782d',

'next': '247a25c59f914adabee9460bd8307196',

'num_lidar_pts': 18,

'num_radar_pts': 3,

'category_name': 'vehicle.car'},

{'token': '247a25c59f914adabee9460bd8307196',

'sample_token': 'f1e3d9d08f044c439ce86a2d6fcca57b',

'instance_token': 'bc38961ca0ac4b14ab90e547ba79fbb6',

'visibility_token': '4',

'attribute_tokens': ['cb5118da1ab342aa947717dc53544259'],

'translation': [391.347, 1144.208, 0.841],

'size': [1.708, 4.01, 1.631],

'rotation': [-0.5443682117180475, 0.0, 0.0, 0.8388463804957943],

'prev': '7bcf4bc87bf143588230254034eada12',

'next': 'e25b9e7019814d53876ff2697df7a2de',

'num_lidar_pts': 20,

'num_radar_pts': 4,

'category_name': 'vehicle.car'},

{'token': 'e25b9e7019814d53876ff2697df7a2de',

'sample_token': '4f545737bf3347fbbc9af60b0be9a963',

'instance_token': 'bc38961ca0ac4b14ab90e547ba79fbb6',

'visibility_token': '4',

'attribute_tokens': ['cb5118da1ab342aa947717dc53544259'],

'translation': [390.512, 1142.201, 0.891],

'size': [1.708, 4.01, 1.631],

'rotation': [-0.5443682117180475, 0.0, 0.0, 0.8388463804957943],

'prev': '247a25c59f914adabee9460bd8307196',

'next': 'fe33c018573e4abda3ff8de0566ee800',

'num_lidar_pts': 24,

'num_radar_pts': 2,

'category_name': 'vehicle.car'},

{'token': 'fe33c018573e4abda3ff8de0566ee800',

'sample_token': '7626dde27d604ac28a0240bdd54eba7a',

'instance_token': 'bc38961ca0ac4b14ab90e547ba79fbb6',

'visibility_token': '4',

'attribute_tokens': ['cb5118da1ab342aa947717dc53544259'],

'translation': [389.29, 1139.46, 0.941],

'size': [1.708, 4.01, 1.631],

'rotation': [-0.5443682117180475, 0.0, 0.0, 0.8388463804957943],

'prev': 'e25b9e7019814d53876ff2697df7a2de',

'next': '2c1a8ae13d76498c838a1fb733ff8700',

'num_lidar_pts': 30,

'num_radar_pts': 2,

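'category_name': 'vehicle.car'}]

If we want data for an entire sample rather than for a single agent in it, we can use the get_annotations_for_sample method. It returns a list of records, one for each annotated agent in the sample.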

sample = helper.get_annotations_for_sample(sample_token)

len(sample)

# Output

78

Note that there are also get_future_for_sample and get_past_for_sample methods, analogous to get_future_for_agent and get_past_for_agent.

We also provide methods for computing an agent's velocity, acceleration, and heading change rate at a given point in time.
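As the comment in the next cell notes, these quantities come from differences between consecutive records. nuScenes keyframes are annotated at 2 Hz, so consecutive records are normally 0.5 s apart.

The sketch below shows the idea with plain finite differences. It is an illustration, not the devkit's code. The record layout (x, y, timestamp in microseconds) and the function names are hypothetical.

```python
import math


def speed(prev, curr):
    """Speed in m/s between two records laid out as (x, y, timestamp_us)."""
    dt = (curr[2] - prev[2]) / 1e6  # microseconds -> seconds
    if dt <= 0:
        return math.nan
    return math.hypot(curr[0] - prev[0], curr[1] - prev[1]) / dt


def acceleration(r0, r1, r2):
    """Change in speed (m/s^2) over three consecutive records."""
    dt = (r2[2] - r1[2]) / 1e6
    if dt <= 0:
        return math.nan
    return (speed(r1, r2) - speed(r0, r1)) / dt


def heading_change_rate(prev_yaw, curr_yaw, dt_s):
    """Yaw rate in rad/s, with the yaw difference wrapped into [-pi, pi]."""
    diff = math.atan2(math.sin(curr_yaw - prev_yaw), math.cos(curr_yaw - prev_yaw))
    return diff / dt_s


# Hypothetical agent sampled every 0.5 s.
r0, r1, r2 = (0.0, 0.0, 0), (3.0, 4.0, 500_000), (9.0, 12.0, 1_000_000)
print(speed(r0, r1))             # 10.0
print(acceleration(r0, r1, r2))  # 20.0
```

Wrapping the yaw difference matters: a heading that crosses ±π (for example from 3.1 to −3.1 rad) is a small turn, not a jump of about 6 rad.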

# We get new instance and sample tokens because these methods require computing the difference between records.

instance_token_2, sample_token_2 = mini_train[5].split("_")

# Meters / second.

print(f"Velocity: {helper.get_velocity_for_agent(instance_token_2, sample_token_2)}\n")

# Meters / second^2.

print(f"Acceleration: {helper.get_acceleration_for_agent(instance_token_2, sample_token_2)}\n")

# Radians / second.

print(f"Heading Change Rate: {helper.get_heading_change_rate_for_agent(instance_token_2, sample_token_2)}")

# Output

Velocity: 4.385040264738063

Acceleration: 0.30576530453207523

Heading Change Rate: 0.0

Map API

We have added several methods to the map API to help query lane centerline information.

from nuscenes.map_expansion.map_api import NuScenesMap

nusc_map = NuScenesMap(map_name='singapore-onenorth', dataroot=DATAROOT)

The following changes were made to the map API:

- get_closest_lane returns the lane closest to a given position.

- get_arcline_path shows the internal data representation of a lane.

- get_outgoing_lane_ids and get_incoming_lane_ids let you explore lane connectivity.
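get_outgoing_lane_ids returns the successors of a lane. Following it repeatedly therefore enumerates the possible routes ahead of an agent.

Below is a minimal depth-first sketch. It assumes the successor lists have already been collected into a plain dict; in practice you would fill the dict by calling nusc_map.get_outgoing_lane_ids. The lane IDs and the function name are hypothetical.

```python
from typing import Dict, List


def enumerate_routes(start: str, successors: Dict[str, List[str]],
                     max_depth: int) -> List[List[str]]:
    """All lane sequences starting at `start`, each at most `max_depth` lanes long."""
    routes: List[List[str]] = []

    def walk(path: List[str]) -> None:
        # Skip lanes already on the path so cyclic road graphs terminate.
        candidates = [lane for lane in successors.get(path[-1], []) if lane not in path]
        if len(path) == max_depth or not candidates:
            routes.append(path)
            return
        for lane in candidates:
            walk(path + [lane])

    walk([start])
    return routes


# Hypothetical lane graph: lane_a forks into lane_b and lane_c; lane_b continues to lane_d.
successors = {"lane_a": ["lane_b", "lane_c"], "lane_b": ["lane_d"],
              "lane_c": [], "lane_d": []}
print(enumerate_routes("lane_a", successors, max_depth=3))
# [['lane_a', 'lane_b', 'lane_d'], ['lane_a', 'lane_c']]
```

The depth limit keeps the search bounded. Real road graphs branch at every intersection, so the number of routes grows quickly with depth.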

x, y, yaw = 395, 1095, 0

closest_lane = nusc_map.get_closest_lane(x, y, radius=2)

closest_lane

# Output

'5933500a-f0f2-4d69-9bbc-83b875e4a73e'

lane_record = nusc_map.get_arcline_path(closest_lane)

lane_record

# Output

[{'start_pose': [421.2419602954602, 1087.9127960414617, 2.739593514975998],

'end_pose': [391.7142849867393, 1100.464077182952, 2.7365754617298705],

'shape': 'LSR',

'radius': 999.999,

'segment_length': [0.23651121617864976,

28.593481378991886,

3.254561444252876]}]

nusc_map.get_incoming_lane_ids(closest_lane)

# Output

['f24a067b-d650-47d0-8664-039d648d7c0d']

nusc_map.get_outgoing_lane_ids(closest_lane)

# Output

['0282d0e3-b6bf-4bcd-be24-35c9ce4c6591',

'28d15254-0ef9-48c3-9e06-dc5a25b31127']

To help work with lanes, we added an arcline_path_utils module. For example, we may want to discretize a lane into a sequence of poses.

from nuscenes.map_expansion import arcline_path_utils

poses = arcline_path_utils.discretize_lane(lane_record, resolution_meters=1)

poses

# Output

[(421.2419602954602, 1087.9127960414617, 2.739593514975998),

(420.34712994585345, 1088.2930152148274, 2.739830026428688),

(419.45228865726136, 1088.6732086473173, 2.739830026428688),

(418.5574473686693, 1089.0534020798073, 2.739830026428688),

(417.66260608007724, 1089.433595512297, 2.739830026428688),

(416.76776479148515, 1089.813788944787, 2.739830026428688),

(415.87292350289306, 1090.1939823772768, 2.739830026428688),

(414.97808221430097, 1090.5741758097668, 2.739830026428688),

(414.0832409257089, 1090.9543692422567, 2.739830026428688),

(413.1883996371168, 1091.3345626747464, 2.739830026428688),

(412.29355834852475, 1091.7147561072363, 2.739830026428688),

(411.39871705993266, 1092.0949495397263, 2.739830026428688),

(410.5038757713406, 1092.4751429722162, 2.739830026428688),

(409.6090344827485, 1092.8553364047061, 2.739830026428688),

(408.7141931941564, 1093.2355298371958, 2.739830026428688),

(407.81935190556436, 1093.6157232696858, 2.739830026428688),

(406.92451061697227, 1093.9959167021757, 2.739830026428688),

(406.0296693283802, 1094.3761101346656, 2.739830026428688),

(405.1348280397881, 1094.7563035671556, 2.739830026428688),

(404.239986751196, 1095.1364969996453, 2.739830026428688),

(403.3451454626039, 1095.5166904321352, 2.739830026428688),

(402.4503041740119, 1095.8968838646251, 2.739830026428688),

(401.5554628854198, 1096.277077297115, 2.739830026428688),

(400.6606215968277, 1096.657270729605, 2.739830026428688),

(399.7657803082356, 1097.0374641620947, 2.739830026428688),

(398.8709390196435, 1097.4176575945846, 2.739830026428688),

(397.9760977310515, 1097.7978510270746, 2.739830026428688),

(397.0812564424594, 1098.1780444595645, 2.739830026428688),

(396.1864151538673, 1098.5582378920544, 2.739830026428688),

(395.2915738652752, 1098.9384313245444, 2.739830026428688),

(394.3967548911081, 1099.318677260896, 2.739492242286598),

(393.5022271882191, 1099.69960782173, 2.738519982101022),

(392.60807027168346, 1100.0814079160527, 2.737547721915446),

(391.71428498673856, 1100.4640771829522, 2.7365754617298705)]

Given a query pose, we can also find the closest pose on the lane.
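One simple way to picture this projection is to treat the discretized poses as a polyline. You then find the nearest point on any of its segments and accumulate the distance travelled along the lane up to that point.

The sketch below does exactly that. It is a plain point-to-polyline projection, not the arcline-based method in arcline_path_utils, so its numbers need not match the devkit's exactly. The function name is hypothetical.

```python
import numpy as np


def project_point_to_polyline(point, polyline):
    """Closest point on a polyline and the arc length from its start to that point."""
    pts = np.asarray(polyline, dtype=float)
    p = np.asarray(point, dtype=float)
    best_d2, best_point, best_s = np.inf, None, None
    travelled = 0.0
    for a, b in zip(pts[:-1], pts[1:]):
        seg = b - a
        seg_len = float(np.linalg.norm(seg))
        if seg_len == 0.0:
            continue  # skip duplicate vertices
        # Parameter of the orthogonal projection, clamped to stay on the segment.
        t = float(np.clip(np.dot(p - a, seg) / seg_len ** 2, 0.0, 1.0))
        candidate = a + t * seg
        d2 = float(np.sum((p - candidate) ** 2))
        if d2 < best_d2:
            best_d2, best_point, best_s = d2, candidate, travelled + t * seg_len
        travelled += seg_len
    return best_point, best_s


# Hypothetical L-shaped lane: 10 m east, then 10 m north.
lane_xy = [(0.0, 0.0), (10.0, 0.0), (10.0, 10.0)]
closest, along = project_point_to_polyline((12.0, 7.0), lane_xy)
print(closest, along)  # [10.  7.] 17.0
```

To use it with the output above, pass the (x, y) part of each pose from discretize_lane as the polyline. Finer resolutions make the polyline track the arcs more closely.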

closest_pose_on_lane, distance_along_lane = arcline_path_utils.project_pose_to_lane((x, y, yaw), lane_record)

print(x, y, yaw)

closest_pose_on_lane

# Output

395 1095 0

(396.25524909914367, 1098.5289922434013, 2.739830026428688)

# Meters.

distance_along_lane

# Output

27.5

To find the length of the entire lane, use the length_of_lane function.
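A lane is stored as a list of arcline paths, and each path lists its segment lengths under 'segment_length' (three segments in the lane_record printed earlier). The total lane length should therefore be the sum of all segment lengths.

Here is a quick check against that record, assuming this reading of the storage layout. The helper name is hypothetical.

```python
# lane_record copied from the get_arcline_path output above.
lane_record = [{
    'start_pose': [421.2419602954602, 1087.9127960414617, 2.739593514975998],
    'end_pose': [391.7142849867393, 1100.464077182952, 2.7365754617298705],
    'shape': 'LSR',
    'radius': 999.999,
    'segment_length': [0.23651121617864976, 28.593481378991886, 3.254561444252876],
}]


def total_lane_length(lane):
    """Sum of the segment lengths of every arcline path in the lane."""
    return sum(sum(path['segment_length']) for path in lane)


print(total_lane_length(lane_record))
```

The sum comes to roughly 32.0846 m, matching the length_of_lane result for this lane.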

arcline_path_utils.length_of_lane(lane_record)

# Output

32.08455403942341

We can also compute the curvature of the lane at a given distance along it.
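For an arcline path, curvature is piecewise constant: zero on a straight ('S') segment and 1/radius on a left ('L') or right ('R') arc.

The sketch below applies that reading of the record. The sign convention (positive for left turns) is an assumption, as is the function name. It shows why 27.5 m gives zero curvature: that distance falls inside the long straight middle segment of the lane_record above.

```python
def curvature_at(lane, distance):
    """Curvature (1/m) at `distance` metres along a lane made of arcline paths.

    Assumes each path's 'shape' string names its segments in order
    ('L' = left arc, 'S' = straight, 'R' = right arc) and that left turns are positive.
    """
    remaining = distance
    for path in lane:
        for kind, length in zip(path['shape'], path['segment_length']):
            if remaining <= length:
                if kind == 'S':
                    return 0.0
                sign = 1.0 if kind == 'L' else -1.0
                return sign / path['radius']
            remaining -= length
    raise ValueError("distance is beyond the end of the lane")


# lane_record copied from the get_arcline_path output above.
lane_record = [{
    'shape': 'LSR',
    'radius': 999.999,
    'segment_length': [0.23651121617864976, 28.593481378991886, 3.254561444252876],
}]
print(curvature_at(lane_record, 27.5))  # 0.0
```

With a radius of 999.999 m, even the arc segments are practically straight. That is consistent with the nearly constant heading in the discretized poses.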

# 0 means it is a straight lane.

arcline_path_utils.get_curvature_at_distance_along_lane(distance_along_lane, lane_record)

# Output

0

Input Representation

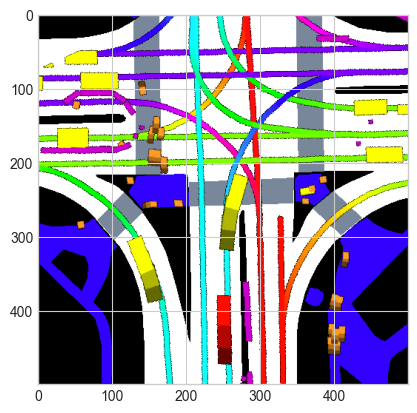

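In prediction, an agent's state is commonly represented as a tensor. It holds information about the semantic map (such as the drivable area and walkways) and the past locations of surrounding agents.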

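Each paper in the field represents the input in a slightly different way. For example, CoverNet and MTP rasterize the map information and agent locations into a three-channel RGB image. Rules of the Road (http://openaccess.thecvf.com/content_CVPR_2019/papers/Hong_Rules_of_the_Road_Predicting_Driving_Behavior_With_a_Convolutional_CVPR_2019_paper.pdf), by contrast, uses a "taller" tensor in which different kinds of information live in separate channels.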

We provide a module called input_representation to help you define your own input representation. In short, you need to define your own StaticLayerRepresentation, AgentRepresentation, and Combinator.

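StaticLayerRepresentation controls how the static map information is represented. AgentRepresentation controls how the locations of agents in the scene are represented. Combinator controls how these two sources of information are merged into a single tensor.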

For more information, see input_representation/interface.py.
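The two styles of input mentioned above differ mainly in how the Combinator merges layers. This minimal NumPy sketch uses two hypothetical helpers:

- overlay_rgb paints RGB layers over each other, with non-black pixels of later layers winning. This is in the spirit of the three-channel images used by CoverNet and MTP.
- stack_channels keeps each single-channel layer as its own channel, producing a taller tensor.

Neither is the devkit's implementation.

```python
import numpy as np


def overlay_rgb(layers):
    """Paint RGB layers (H, W, 3) on top of each other; non-black pixels of later layers win."""
    out = np.zeros_like(layers[0])
    for layer in layers:
        mask = layer.any(axis=-1)  # pixels where this layer draws something
        out[mask] = layer[mask]
    return out


def stack_channels(layers):
    """Keep every (H, W) layer as its own channel, giving an (H, W, num_layers) tensor."""
    return np.stack(layers, axis=-1)


h, w = 4, 4
drivable = np.zeros((h, w, 3), dtype=np.uint8)
drivable[:, :2] = (255, 255, 255)  # left half of the grid is drivable
agent = np.zeros((h, w, 3), dtype=np.uint8)
agent[1, 1] = (255, 255, 0)        # one yellow pixel for the agent

rgb = overlay_rgb([drivable, agent])
tall = stack_channels([drivable[..., 0], agent[..., 0]])
print(rgb.shape, tall.shape)  # (4, 4, 3) (4, 4, 2)
```

The overlay keeps the image compact but lets upper layers hide lower ones. Stacking preserves every layer at the cost of more input channels.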

We also provide implementations of the input representations used in CoverNet and MTP.

import matplotlib.pyplot as plt

%matplotlib inline

from nuscenes.prediction.input_representation.static_layers import StaticLayerRasterizer

from nuscenes.prediction.input_representation.agents import AgentBoxesWithFadedHistory

from nuscenes.prediction.input_representation.interface import InputRepresentation

from nuscenes.prediction.input_representation.combinators import Rasterizer

static_layer_rasterizer = StaticLayerRasterizer(helper)

agent_rasterizer = AgentBoxesWithFadedHistory(helper, seconds_of_history=1)

mtp_input_representation = InputRepresentation(static_layer_rasterizer, agent_rasterizer, Rasterizer())

instance_token_img, sample_token_img = 'bc38961ca0ac4b14ab90e547ba79fbb6', '7626dde27d604ac28a0240bdd54eba7a'

anns = [ann for ann in nuscenes.sample_annotation if ann['instance_token'] == instance_token_img]

img = mtp_input_representation.make_input_representation(instance_token_img, sample_token_img)

plt.imshow(img)

Model Implementations

We provide PyTorch implementations of CoverNet and MTP. Below we show how to make predictions on the input representation created earlier.
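One layout detail is worth spelling out. The rasterized input is an image laid out as (height, width, channels), which is why plt.imshow can display it. PyTorch convolutional networks, however, expect (batch, channels, height, width), and that is what `.permute(2, 0, 1).unsqueeze(0)` handles in the code that follows.

The NumPy equivalent is shown on a dummy array. Its 500×500 size is only an assumption for illustration.

```python
import numpy as np

img = np.zeros((500, 500, 3), dtype=np.float32)  # stand-in for the rasterized input, (H, W, C)
chw = np.transpose(img, (2, 0, 1))               # (C, H, W), like torch's .permute(2, 0, 1)
batch = np.expand_dims(chw, axis=0)              # (1, C, H, W), like .unsqueeze(0)
print(batch.shape)  # (1, 3, 500, 500)
```

The agent state vector gets a batch dimension the same way: it is built as a nested list of shape (1, 3) holding velocity, acceleration, and heading change rate.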

from nuscenes.prediction.models.backbone import ResNetBackbone

from nuscenes.prediction.models.mtp import MTP

from nuscenes.prediction.models.covernet import CoverNet

import torch

backbone = ResNetBackbone('resnet50')

mtp = MTP(backbone, num_modes=2)

# Note that the value of num_modes depends on the size of the lattice used for CoverNet.

covernet = CoverNet(backbone, num_modes=64)

agent_state_vector = torch.Tensor([[helper.get_velocity_for_agent(instance_token_img, sample_token_img),

helper.get_acceleration_for_agent(instance_token_img, sample_token_img),

helper.get_heading_change_rate_for_agent(instance_token_img, sample_token_img)]])

image_tensor = torch.Tensor(img).permute(2, 0, 1).unsqueeze(0)

# CoverNet outputs a probability distribution over the trajectory set.

# These are the logits of the probabilities

logits = covernet(image_tensor, agent_state_vector)

print(logits)

#import pickle

# Epsilon is the amount of coverage in the set,

# i.e. a real world trajectory is at most 8 meters from a trajectory in this set

# We released the set for epsilon = 2, 4, 8. Consult the paper for more information

# on how this set was created

#PATH_TO_EPSILON_8_SET = ""

#trajectories = pickle.load(open(PATH_TO_EPSILON_8_SET, 'rb'))

# Saved them as a list of lists

#trajectories = torch.Tensor(trajectories)

# Print 5 most likely predictions

#trajectories[logits.argsort(descending=True)[:5]]

from nuscenes.prediction.models.physics import ConstantVelocityHeading, PhysicsOracle

cv_model = ConstantVelocityHeading(sec_from_now=6, helper=helper)

physics_oracle = PhysicsOracle(sec_from_now=6, helper=helper)

cv_model(f"{instance_token_img}_{sample_token_img}")

physics_oracle(f"{instance_token_img}_{sample_token_img}")

Submitting Results

The previous sections introduced the Prediction data type. This section explains its format in detail.

A Prediction has four fields:

- instance: the agent's instance token.

- sample: the agent's sample token.

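- prediction: the model's prediction. A prediction can contain up to 25 candidate trajectories. This field must be a three-dimensional NumPy array of shape (number of trajectories, also called modes; number of time steps; 2).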

- probabilities: the probability of each predicted mode. This is a NumPy array of shape (number of modes,).
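A model such as CoverNet scores every trajectory in its set. Its output therefore has to be reduced to at most 25 modes with matching probabilities before it fits this format.

A minimal sketch under these assumptions:

- The trajectory set is hypothetical, with shape (num_trajectories, 12, 2), matching the 12 time steps used in the examples below.
- The kept probabilities are renormalized to sum to 1. This is a design choice of this sketch.
- The function name is hypothetical.

```python
import numpy as np


def to_submission_arrays(logits, trajectory_set, max_modes=25):
    """Keep the top-scoring modes and return (prediction, probabilities) arrays."""
    logits = np.asarray(logits, dtype=float)
    probs = np.exp(logits - logits.max())  # numerically stable softmax
    probs /= probs.sum()
    top = np.argsort(-probs)[:max_modes]   # indices of the most likely trajectories
    kept = probs[top] / probs[top].sum()   # renormalise over the kept modes
    return trajectory_set[top], kept


rng = np.random.default_rng(0)
trajectory_set = rng.normal(size=(64, 12, 2))  # hypothetical 64-trajectory set
logits = rng.normal(size=64)
prediction, probabilities = to_submission_arrays(logits, trajectory_set)
print(prediction.shape, probabilities.shape)  # (25, 12, 2) (25,)
```

The returned arrays have exactly the shapes the prediction and probabilities fields require, with modes ordered from most to least likely.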

from nuscenes.eval.prediction.data_classes import Prediction

import numpy as np

# Each call below raises an error, so they are commented out to let the cell run. Uncomment one to see its error.

# This would raise an error because instance is not a string.

# Prediction(instance=1, sample=sample_token_img,
#            prediction=np.ones((1, 12, 2)), probabilities=np.array([1]))

# This would raise an error because sample is not a string.

# Prediction(instance=instance_token_img, sample=2,
#            prediction=np.ones((1, 12, 2)), probabilities=np.array([1]))

# This would raise an error because prediction is not a numpy array.

# Prediction(instance=instance_token_img, sample=sample_token_img,
#            prediction=np.ones((1, 12, 2)).tolist(), probabilities=np.array([1]))

# This would raise an error because probabilities is not a numpy array.

# Prediction(instance=instance_token_img, sample=sample_token_img,
#            prediction=np.ones((1, 12, 2)), probabilities=[0.3])

# This would raise an error because there are more than 25 predicted modes.

# Prediction(instance=instance_token_img, sample=sample_token_img,
#            prediction=np.ones((30, 12, 2)), probabilities=np.array([1/30]*30))

# This would raise an error because the number of predictions and probabilities don't match.

# Prediction(instance=instance_token_img, sample=sample_token_img,
#            prediction=np.ones((13, 12, 2)), probabilities=np.array([1/12]*12))

To submit results to the challenge, store your model's predictions in a Python list and save it as a JSON file. Then zip the file and upload it to the evaluation server.

For an example, see eval/prediction/baseline_model_inference.py.
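The packaging steps can be sketched with the standard library alone. JSON cannot store NumPy arrays directly, so this sketch converts each prediction to a plain dict whose arrays become lists. The keys mirror the four Prediction fields; the file names and the helper are hypothetical. The devkit's own serialization, as used in baseline_model_inference.py, may differ in detail.

```python
import json
import os
import tempfile
import zipfile

import numpy as np


def prediction_to_dict(instance, sample, prediction, probabilities):
    """JSON-friendly dict with the four Prediction fields (arrays become lists)."""
    return {"instance": instance,
            "sample": sample,
            "prediction": np.asarray(prediction).tolist(),
            "probabilities": np.asarray(probabilities).tolist()}


predictions = [prediction_to_dict("bc38961ca0ac4b14ab90e547ba79fbb6",
                                  "7626dde27d604ac28a0240bdd54eba7a",
                                  np.zeros((1, 12, 2)), np.array([1.0]))]

out_dir = tempfile.mkdtemp()
json_path = os.path.join(out_dir, "predictions.json")  # hypothetical file name
with open(json_path, "w") as f:
    json.dump(predictions, f)

zip_path = os.path.join(out_dir, "submission.zip")     # hypothetical file name
with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as zf:
    zf.write(json_path, arcname="predictions.json")
print(zip_path)
```

Reading the archive back and checking a record or two before uploading is a cheap way to catch serialization mistakes early.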

References

- https://colab.research.google.com/github/nutonomy/nuscenes-devkit/blob/master/python-sdk/tutorials/prediction_tutorial.ipynb

- https://github.com/nutonomy/nuscenes-devkit/tree/master/python-sdk/nuscenes/prediction

This article was written by Chakhsu Lau and is licensed under the Creative Commons Attribution 4.0 International License.

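Unless marked as reposted or attributed to another source, all articles on this site are original or translated; please credit the source before reposting.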
